“Alexa, play that mint tune from that dead good band that ar’ kid proper loves, nice one cock”.

I guess it should be no surprise that, according to new research from USwitch.com, smart devices struggle to understand us northerners more than our southern counterparts.

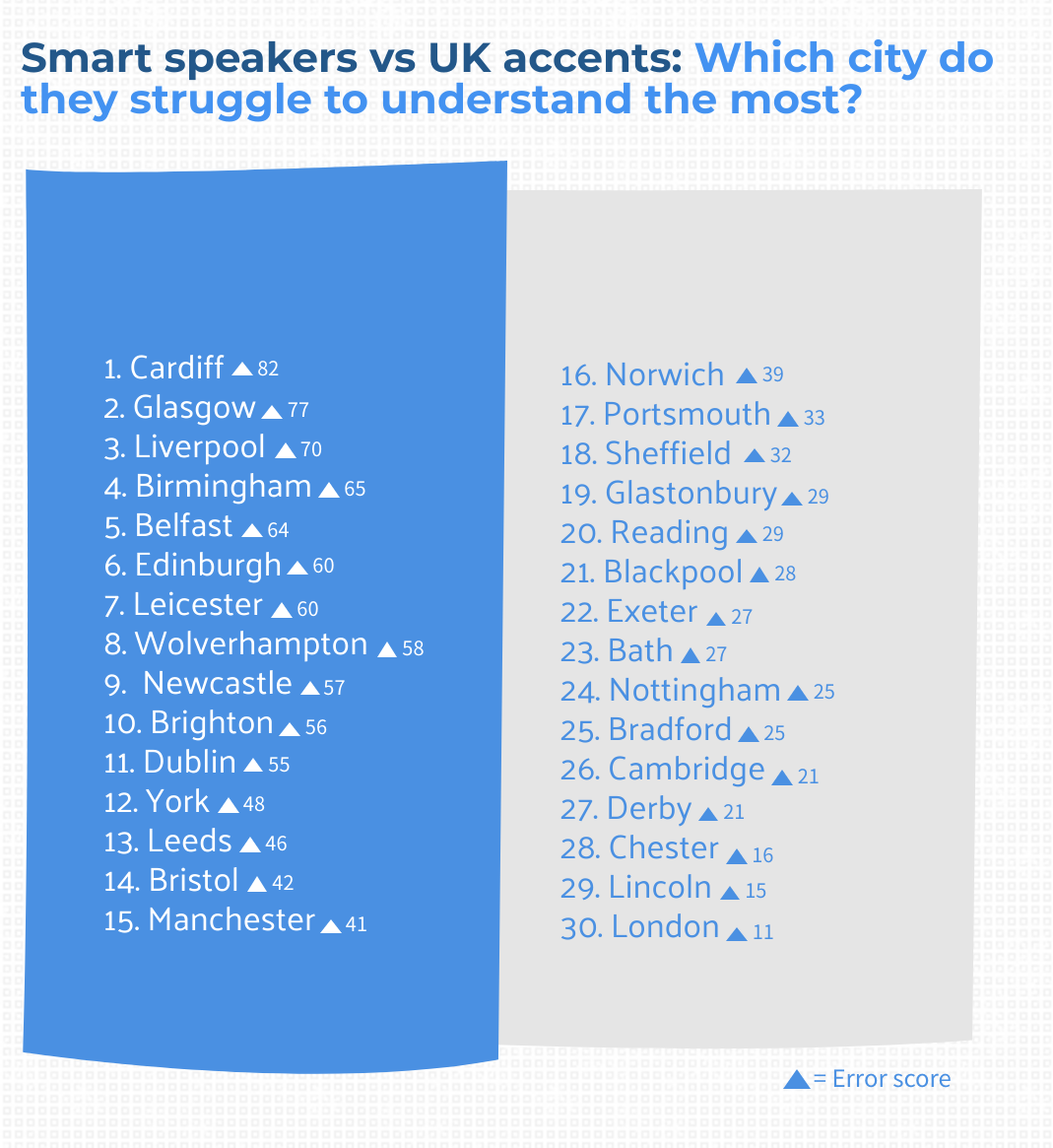

USwitch wanted to understand which accents smart speakers struggled with the most. Their research took them to 30 cities throughout the UK, where they got people to have a chat with their Amazon Echo or Google Home.

Volunteers asked their devices ten questions, and each accent was then given a score based on how well the devices understood them. A score of 1 out of 10, for example, means one error across the ten questions asked, so a lower score is better.

The three worst cities for understandability all boast distinct local dialects. Liverpool ranks as the third worst city for understandability. Scousers perform a whopping 1,700 searches a month for ‘Why doesn’t my smart speaker understand me’.

Glasgow is the second hardest city to understand, with a combined total of 1,550 searches for smart speaker accent issues and a voice clarity score of 5.

Cardiff takes the crown for the most challenging accent. With a total of 1,550 understandability searches and a voice clarity score of 7 out of 10, the city was the clear winner, beating the likes of Belfast and Dublin along the way.

However, Manchester fares surprisingly well in the overall list compared to other cities across the region. Mancs were deemed easier to understand than not only Scousers and Glaswegians, but also people from Edinburgh, York, Leeds, and Newcastle.

London is the easiest city for smart speakers to understand, with only 200 monthly searches for ‘Why doesn’t Alexa understand me?’ and 75 for the same question about Google.

London also scored a 2 for voice clarity, making it near perfect when it comes to pronunciation.

‘The results should be taken with a pinch of salt’

“It’s not very surprising that the error scores tend to be lower for southern accents,” says Dr Patrycja Strycharczuk, Lecturer in Linguistics & Quantitative Methods at the University of Manchester.

“In general, speech recognition systems will perform better for accents similar to the model that they’ve been trained on. I would expect that speech recognition systems for British English are trained on a standard ‘Received Pronunciation’ type of accent.

“This is meant to be a regionless accent, but it is similar to the accents spoken in the South of England.”

According to Dr Strycharczuk, the results would also differ depending on how many respondents were included from each city, and how well the speaker demographics were matched for individual cities, which could affect just how meaningful the data is.

“The accuracy of speech perception may vary depending on speaker age, sex, the type of occupation they’re in, and factors such as speech rate, or ambient noise,” says Dr Strycharczuk. “Without this information, the results should be taken with a pinch of salt.”

While smart speakers are certainly making our home lives easier, they’ve still got some way to go before they make things seamless. With an estimated 1 in 5 UK households having an AI assistant in their home, it’s become clear there are some people who just can’t get their message across.

Nick Baker, broadband expert at Uswitch.com, says tech brands should be doing more to make devices more accessible: “Smart speakers are becoming an integral part of many modern-day homes. While most of us find them useful, it’s clear that more needs to be done to make voice recognition features smoother.

“The use of artificial intelligence in products is only going to increase, and as it grows in popularity it’s important that features are accessible to all.

“The more we use virtual assistants the better they will get at understanding us. Some brands are already taking steps to allow assistants to learn about our accents, which should avoid alienating customers and improve user experience.”